As previously mentioned about one week ago, a startup company called Optalysys is trying to invent a fully optical computer aimed at many of the same tasks for which GPUs are currently used. Amazingly, Optalysys claims it can build an optical solver supercomputer, an astonishing 17 exaFLOPS machine, by 2020. Here we look at the wealth of information provided on the Optalysys website.

“Optalysys’ technology applies the principles of diffractive and Fourier optics to calculate the same processor-intensive mathematical functions used in CFD (Computational Fluid Dynamics) and pattern recognition,” explains founder and CEO Dr. Nick New. “Using low-power lasers and high-resolution liquid crystal micro-displays, calculations are performed in parallel at the speed of light.”

The company is developing two products: a ‘Big Data’ analysis system and an Optical Solver Supercomputer, both on track for a 2017 launch.

The analysis unit works in tandem with a traditional supercomputer. Initial models will start at 1.32 petaflops and will ramp up to 300 petaflops by 2020.

The Optalysys Optical Solver Supercomputer will initially offer 9 petaflops of compute power, increasing to 17.1 exaflops by 2020.

The 40 gigaFLOPS demonstrator uses 500×500 pixels updated 20 times per second. Each pixel does the work of about 8,000 floating point operations in each cycle. Speeding up 427 million times to 17.1 exaFLOPS could be done with 500,000 × 500,000 pixels and 8,550 cycles per second. Multiple LCD displays can be used.
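The scaling arithmetic can be checked in a few lines of Python. This is only a sketch of the back-of-envelope reasoning in this article: it assumes the work done per pixel per cycle stays at the demonstrator's level as the system grows, and the variable names are illustrative, not anything published by Optalysys.

```python
# Figures quoted in the article (variable names are illustrative).
demo_flops = 40e9        # demonstrator: 40 gigaFLOPS
demo_side = 500          # demonstrator grid: 500 x 500 pixels
demo_hz = 20             # demonstrator: 20 cycles per second

target_side = 500_000    # scaled-up grid: 500,000 x 500,000 pixels
target_hz = 8550         # scaled-up rate: 8,550 cycles per second

# Work attributed to each pixel in each cycle of the demonstrator.
ops_per_pixel = demo_flops / (demo_side * demo_side * demo_hz)

# Gains from adding pixels and from cycling faster.
pixel_gain = (target_side / demo_side) ** 2
rate_gain = target_hz / demo_hz
speedup = pixel_gain * rate_gain

# Assumption: per-pixel work per cycle is unchanged at the larger scale.
projected_flops = demo_flops * speedup

print(f"ops per pixel per cycle: {ops_per_pixel:,.0f}")
print(f"pixel gain: {pixel_gain:,.0f}x, rate gain: {rate_gain}x")
print(f"overall speedup: {speedup:,.0f}x")
print(f"projected: {projected_flops:.3e} FLOPS")
```

The pixel count contributes a factor of one million and the cycle rate a factor of 427.5, which together give the roughly 427 million-fold speedup quoted above.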

4K resolution monitors are currently available in the $600-700 range, and $399 models are coming soon. Most displays refresh at 60 Hz or 120 Hz, but some have 600 Hz refresh rates. Technologically, the refresh rate can be driven higher. There has been no need to do so for displays meant for human viewing, but there will be a need for optical computing. 4K monitors usually have 8.3 million pixels (3840×2160). About thirty thousand 4K monitors would get to 500K × 500K.

Here are the answers Optalysys gives on the details of the optical computer.

The Optalysys technology is pioneering in that it will allow mathematical functions and operations to be performed optically, using low power laser light instead of electricity. These operations may be combined to produce larger functions including pattern recognition and derivatives. Our goal is to provide the step change in computing power that is required in Big Data and Computational Fluid Dynamics (CFD) applications.

The technology will be in the form of enclosed, compact optical systems which can sit on the desktop and are powered from a normal mains supply. They will be driven from a software wrapper and will feature interfaces to commonly used tools like OpenFOAM and Matlab.

As a co-processor, the technology development will initially focus on interfacing with large simulations and data sets to provide analysis of the data as it is produced, providing new levels of capability in areas where the volume of data is such that detailed analysis is not possible. Looking further ahead, the technology will be able to produce the actual simulation data at speeds and resolutions far beyond the capabilities of traditional computing.

How can the Optalysys technology provide such a big improvement over traditional computing?

They use the natural properties of light travelling through tiny liquid crystal micro-display pixels, thousandths of a millimetre across, to form interference patterns. These patterns are equivalent to certain mathematical operations, which form the basis of many large processes and simulations that the most powerful processor arrays and supercomputers are built specifically to run. These processes are fundamentally parallel operations, with each data point in the output resulting from a calculation involving all the data points in the input. Electronic processing, however, is fundamentally serial, performing one calculation after another. This results in big data management problems when the resolutions are increased, with improvements in processor speed only resulting in incremental improvements in performance. The Optalysys technology, by contrast, is truly parallel: once the data is loaded into the liquid crystal grids, the processing is done at the speed of light, regardless of the resolution. The Optalysys technology we are developing is highly innovative and we have filed several patents in the process.

They are currently progressing through the low Technology Readiness Levels and aim to have the first demonstrator ready in November 2014. If all goes to plan, they aim to have the first completed systems available by 2016. Commercial partnerships are currently being established which will guide the technology development to meet specific application requirements.

All the components used in the Optalysys systems will be low voltage driven, allowing large processing tasks to be carried out at a fraction of the running cost of a large processor array or supercomputer. The current largest supercomputer, Tianhe-2, reportedly consumes 24 megawatts of power at peak performance and costs millions of dollars per year to run. In comparison, the Optalysys systems will run from a standard mains power supply.

The technology employs the principles of diffractive optics, with high resolution liquid crystal micro displays and low power lasers. Coupled with the novel Optalysys designs, we are able to produce a set of core mathematical operations that can be combined to produce larger functions, at speeds and resolutions beyond traditional computing methods.

What is the basis for the Optalysys technology?

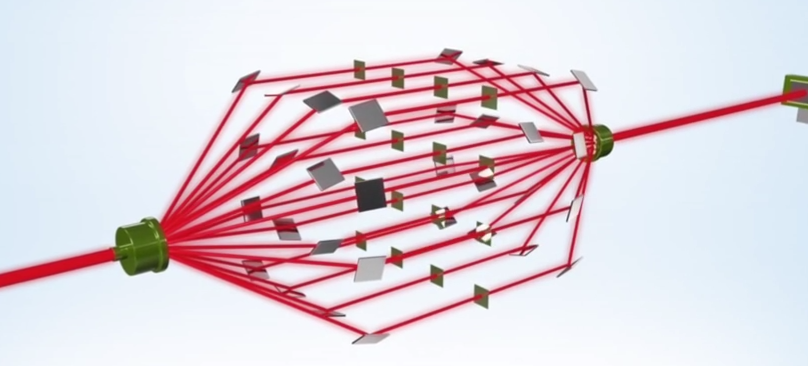

Using diffraction and Fourier Optics, coupled with our novel designs, we are able to combine matrix multiplication and Optical Fourier transforms into more complex mathematical processes, such as derivative operations. In place of lenses, we also use liquid crystal patterns to focus the light as it travels through the system. This means the tight alignment tolerances that exist through the system are achieved through the dynamic addressing in the software.
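To make the link between Fourier transforms and derivative operations concrete, here is a minimal digital sketch in Python. It relies on a standard identity: differentiating a periodic signal corresponds to multiplying each Fourier coefficient by i times its angular frequency. This is an illustration of the mathematics only, not a description of the Optalysys optical design; the function names and the naive O(n²) transform are assumptions made for the example.

```python
import cmath
import math

def dft(values, inverse=False):
    """Naive O(n^2) discrete Fourier transform, or its inverse."""
    n = len(values)
    sign = 1 if inverse else -1
    out = []
    for k in range(n):
        total = sum(values[m] * cmath.exp(sign * 2j * cmath.pi * m * k / n)
                    for m in range(n))
        out.append(total / n if inverse else total)
    return out

def spectral_derivative(samples, length):
    """Differentiate a periodic signal sampled uniformly on [0, length)."""
    n = len(samples)
    spectrum = dft(samples)
    scaled = []
    for k in range(n):
        # Map bin index to a signed integer frequency.
        freq = k if k < n / 2 else k - n
        # The Nyquist bin of an even-length signal carries no usable slope.
        if n % 2 == 0 and k == n // 2:
            freq = 0
        scaled.append(spectrum[k] * 1j * 2 * math.pi * freq / length)
    return [value.real for value in dft(scaled, inverse=True)]

# Example: the derivative of sin(x) over one period should match cos(x).
n = 16
xs = [2 * math.pi * m / n for m in range(n)]
derivative = spectral_derivative([math.sin(x) for x in xs], 2 * math.pi)
max_error = max(abs(d - math.cos(x)) for d, x in zip(derivative, xs))
print(f"largest deviation from cos(x): {max_error:.2e}")
```

In the digital version the two transforms dominate the cost; the claim made for the optical system is that those transforms are performed by the light itself.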

All modern computers have multiple processor cores which run in parallel – how does the Optalysys approach differ?

For parallel functions, such as the Fourier Transform, each number in the output is the result of a calculation involving every number in the input. Dividing the processing tasks between multiple processor cores for such tasks results in complex data management issues as each processor core must communicate with the others and data must be buffered into local memory. This creates challenging coding problems and only incremental improvements as the resolution is increased.
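The all-to-all dependence described above is easy to see in a small digital example. The Python sketch below uses a naive discrete Fourier transform (written out for clarity, not speed) and shows that changing a single input sample changes every output coefficient. The sample values are arbitrary and chosen only for illustration.

```python
import cmath

def dft(values):
    """Naive discrete Fourier transform: every output sums over every input."""
    n = len(values)
    return [sum(values[m] * cmath.exp(-2j * cmath.pi * m * k / n)
                for m in range(n))
            for k in range(n)]

signal = [1.0, 2.0, 0.5, -1.0, 3.0, 0.0, -2.0, 1.5]

# Perturb one input sample only.
perturbed = list(signal)
perturbed[3] += 1.0

before = dft(signal)
after = dft(perturbed)

# Flag each output coefficient that moved.
changed = [abs(a - b) > 1e-9 for a, b in zip(before, after)]
print(f"{sum(changed)} of {len(changed)} output coefficients changed")
```

This is why splitting such a transform across processor cores forces the cores to exchange data: no output can be finished from a local slice of the input alone.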

The Optalysys technology operates truly in parallel, using the natural properties of light and diffraction. Numerical data is entered into the liquid crystal grids (known as SLMs or Spatial Light Modulators) and is encoded into the laser beam as it passes through. The data is then processed together as the beam is focussed or passes through the next optical stage. Increasing the resolution of the data is achieved through adding more pixels to the SLM, but the process time, once the data is addressed, remains the same regardless of the amount of data being entered. The Optalysys approach therefore provides a truly scalable method of producing large calculations of the type used in fluid dynamics modelling (CFD) and correlation pattern recognition.

How is the Optalysys technology benchmarked against electronic methods?

The simplest benchmark to use is the FLOPS (Floating Point Operations Per Second) benchmark used for electronic processors, although this is not an ideal comparison as it does not take into account the supporting infrastructure requirements, or the specific process it is being judged for. Roughly speaking, a two-dimensional FFT (Fast Fourier Transform) process takes n^2 log(n) operations, where n is the number of data points in the grid. Based on this, our first demonstrator system, which will operate at a frame rate of 20 Hz and a resolution of 500×500 pixels and produce a vorticity plot, will operate at around 40 GFLOPS. However, this will be scaled in terms of frame rate, resolution and functionality, to produce solver systems operating at well over the petaFLOP rates that supercomputers are quoted at, leading to exaFLOP calculations and beyond.
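As a rough illustration of how the n^2 log(n) estimate grows with resolution, the Python sketch below evaluates it for a few grid sizes. Two assumptions are made that the source does not spell out: n is taken as the number of points along one side of a square grid, and the logarithm is base 2. The function name is hypothetical, and the count covers a single transform only, not the full set of operations behind a quoted FLOPS figure.

```python
import math

def fft2d_ops(n):
    """Approximate operation count n^2 * log2(n) for one 2D FFT on an n x n grid.

    Assumes n is the side length of the grid and a base-2 logarithm.
    """
    return n * n * math.log2(n)

for side in (500, 5_000, 50_000, 500_000):
    print(f"{side:>7} x {side:<7} ~ {fft2d_ops(side):.3e} operations per transform")

# Growth in per-transform cost when the side length increases tenfold.
growth = fft2d_ops(5_000) / fft2d_ops(500)
print(f"10x the side length costs about {growth:.0f}x the operations")
```

Under these assumptions, a tenfold increase in side length raises the per-transform count by somewhat more than a factor of 100. That is the growth an electronic processor has to absorb, and the growth the optical approach is claimed to avoid once the data is loaded.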

Optalysys technology is quick at CFD calculations, so what?

Optical techniques can make all stages of the CFD process more effective. We are far from a pure optical CFD solver, but optical techniques can help us generate data and learn from it more effectively. We are always thinking about the full CFD process and learning. This leads to big data, another exciting opportunity.

Spectral methods are not used in many commercial CFD codes. Will optical technologies change this?

It is not easy to say how optical technologies will drive the future of CFD. Spectral methods are widespread in weather analysis, large research organisations and academia. Our focus is to provide optical technologies that complement what already exists.

Will you be developing an optical CFD solver?

That would be the ultimate project to get involved with and we would love to partner up to do this. Our focus is to prove initially that optical technologies can improve and complement what is already being done digitally.

Do the optical numerics offer anything different mathematically?

Absolutely. Optical technology represents point data using light and gives us access to spectral non-local operations instantaneously. This is potentially very important because we might be able to make linear algebra on digital computers more efficient and more accurate. For example, we can do matrix multiplications or convolution operations at the speed of light.

Brian Wang is a Futurist Thought Leader and a popular Science blogger with 1 million readers per month. His blog Nextbigfuture.com is ranked #1 Science News Blog. It covers many disruptive technology and trends including Space, Robotics, Artificial Intelligence, Medicine, Anti-aging Biotechnology, and Nanotechnology.
