Today Anwar al-Awlaki was killed by a U.S. drone strike. Awlaki, identified by U.S. intelligence as “chief of external operations” for al Qaeda’s Yemen branch and a Web-savvy propagandist for the Islamist cause, was killed in an attack by missiles fired from multiple CIA drones in a remote Yemeni town.

The US is continuing to improve the capabilities of its drones.

Danger Room – Progeny just started work on its drone-mounted, “Long Range, Non-cooperative, Biometric Tagging, Tracking and Location” system. The company is one of several firms that have developed algorithms for the military that use two-dimensional images to construct a 3D model of a face. It’s not an easy trick to pull off, even with the proper lighting, and even with a willing subject. Building a model of someone on the run is harder. Constructing a model using the bobbing, weaving, flying, relatively low-resolution cameras on small unmanned aerial vehicles is tougher still.

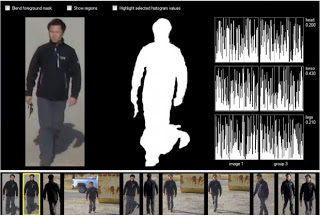

If the system can’t get a good enough look at a target’s face, Progeny has other ways of IDing its prey. The key, developed under a previous Navy contract, is a kind of digital stereotyping. Using a series of so-called “soft biometrics” — everything from age to gender to “ethnicity” to “skin color” to height and weight — the system can keep track of targets “at ranges that are impossible to do with facial recognition,” Faltemier says. Like 750 feet away or more.

But if Progeny can get close enough, Faltemier says his technology can even tell identical twins apart.

Researchers from Notre Dame and Michigan State Universities collected images of faces at a “Twins Days” festival. Progeny then zeroed in on the twins’ scars, marks, and tattoos, and was able to tell one from the other.

The Pentagon isn’t content to simply watch the enemies it knows it has, however. The Army also wants to identify potentially hostile behavior and intent, in order to uncover clandestine foes.

Charles River Analytics is using its Army cash to build a so-called “Adversary Behavior Acquisition, Collection, Understanding, and Summarization (ABACUS)” tool. The system would integrate data from informants’ tips, drone footage, and captured phone calls. Then it would apply “a human behavior modeling and simulation engine” that would spit out “intent-based threat assessments of individuals and groups.” In other words: This software could potentially find out which people are most likely to harbor ill will toward the U.S. military or its objectives. Feeling nervous yet?

Brian Wang is a Futurist Thought Leader and a popular Science blogger with 1 million readers per month. His blog Nextbigfuture.com is ranked the #1 Science News Blog. It covers many disruptive technologies and trends including Space, Robotics, Artificial Intelligence, Medicine, Anti-aging Biotechnology, and Nanotechnology.

Known for identifying cutting-edge technologies, he is currently a Co-Founder of a startup and a fundraiser for high-potential early-stage companies. He is the Head of Research for Allocations for deep technology investments and an Angel Investor at Space Angels.

A frequent speaker at corporations, he has been a TEDx speaker, a Singularity University speaker and guest at numerous interviews for radio and podcasts. He is open to public speaking and advising engagements.