Here is a PDF of Nvidia's announcement of its 2 to 8 teraflop Tesla desktop supercomputer.

UPDATE:

New desktop supercomputers are now available from Nvidia partners, and there will be competition from AMD FireStream. Four Tesla boards in one desktop machine provide 4 teraflops of single precision power, and 400 gigaflops of double precision, for less than $10,000.

END UPDATE


Pricing for the Tesla GPU would start at $1,499 and the deskside computer at $7,500.

Nvidia Tesla product overview. The systems have fast memory access, at 76.8 GB/sec.
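The 76.8 GB/sec figure is consistent with a simple bus-width calculation. The sketch below assumes a 384-bit memory interface running at an effective 1,600 million transfers per second; neither number appears in the announcement, so treat both as assumptions.

```python
# Peak memory bandwidth = bus width in bytes x effective transfer rate.
# ASSUMPTION: 384-bit bus and 1,600 MT/s effective data rate;
# these specs are not stated in the announcement text.
BUS_WIDTH_BITS = 384
TRANSFERS_PER_SECOND = 1.6e9

def peak_bandwidth_gb_per_s(bus_width_bits: int, transfers_per_s: float) -> float:
    """Return peak bandwidth in GB/s (1 GB = 10**9 bytes)."""
    bytes_per_transfer = bus_width_bits / 8
    return bytes_per_transfer * transfers_per_s / 1e9

bandwidth = peak_bandwidth_gb_per_s(BUS_WIDTH_BITS, TRANSFERS_PER_SECOND)
print(f"{bandwidth:.1f} GB/s")
```

This is a theoretical peak; sustained bandwidth in real code is lower.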

The Tesla C870, a single-GPU card, costs $1,499.

The deskside Tesla D870 supercomputer, with two x8 series GPUs, needs 550W of power, delivers 1 teraflop, and will cost $7,500 beginning in August. It has 3 gigabytes of system memory (1.5 GB per GPU). With multiple deskside systems, a standard PC or workstation is transformed into a personal supercomputer, delivering up to 8 teraflops of compute power to the desktop. Eight of the deskside systems would cost $60,000 to deliver 8 teraflops.
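The deskside arithmetic can be sketched as a small function. It uses the figures above ($7,500 and about 1 teraflop per D870) and assumes price and performance scale linearly with the number of units; it ignores the cost of the host PC and any interconnect.

```python
# Cost and throughput of ganging Tesla D870 deskside units, using the
# article's figures: $7,500 and about 1 teraflop per unit.
# ASSUMPTION: linear scaling; host PC and interconnect costs are ignored.
D870_PRICE_USD = 7_500
D870_TERAFLOPS = 1.0

def deskside_cluster(units: int) -> tuple[int, float, float]:
    """Return (total cost in USD, total teraflops, USD per gigaflop)."""
    cost = units * D870_PRICE_USD
    teraflops = units * D870_TERAFLOPS
    usd_per_gflops = cost / (teraflops * 1_000)
    return cost, teraflops, usd_per_gflops

for n in (1, 2, 4, 8):
    cost, tf, per_gf = deskside_cluster(n)
    print(f"{n} unit(s): ${cost:,} for {tf:g} TF (${per_gf:.2f}/GFLOPS)")
```

Under these assumptions the price per gigaflop stays flat at $7.50 no matter how many units are added.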

Future versions of the deskside system will be able to provide up to four Tesla GPUs per system, or eight Tesla GPUs in a 3U rack mount. [From the Nvidia technical briefing pdf]

The Tesla S870 GPU computing server blade will have a retail price of $12,000, with four GPUs, 2 teraflops, and 550 watts of power use. This is a 1U GPU computing server. With four to eight GPUs in a 1U form factor, GPU computing with the highest performance per volume per watt will be possible. An 8-GPU version would deliver 4 teraflops and will likely cost around $18,000 (my estimate). Four of the 4-way S870s would cost $48,000 and total 8 teraflops.

A 12 Teraflop system (24 GPUs) that will cost $60,000-70,000 will be selling soon from Evolved Machines.
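Putting the larger configurations on a common dollars-per-gigaflop scale makes them easier to compare. The prices are the ones quoted above; the 8-GPU 1U price is an estimate, and the Evolved Machines price is a range, so both ends are shown.

```python
# Dollars per gigaflop for the larger configurations described above.
# Figures come from the article; the 8-GPU 1U price is an estimate, and
# Evolved Machines is quoted as a $60,000-70,000 range.
configs = {
    "Tesla S870 (4 GPUs)": (12_000, 2.0),
    "4 x Tesla S870": (48_000, 8.0),
    "8-GPU 1U server (estimate)": (18_000, 4.0),
    "Evolved Machines, low end": (60_000, 12.0),
    "Evolved Machines, high end": (70_000, 12.0),
}

def usd_per_gflops(price_usd: float, teraflops: float) -> float:
    """Convert a price and a teraflop rating to dollars per gigaflop."""
    return price_usd / (teraflops * 1_000)

for name, (price, tf) in configs.items():
    print(f"{name}: ${usd_per_gflops(price, tf):.2f}/GFLOPS")
```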

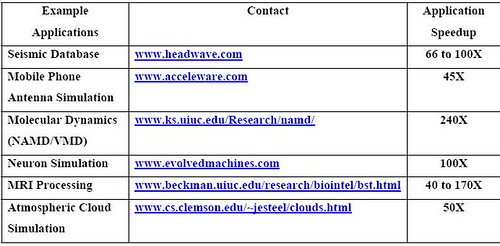

How much Tesla can speed up certain applications. Note that molecular dynamics runs 240 times faster.

The Tesla GPU (graphics processing unit) features 128 parallel processors and delivers up to 518 gigaflops of parallel computation. A gigaflop refers to the processing of a billion floating point operations per second. Nvidia envisions the Tesla being used in high-performance computing environments such as geosciences, molecular biology, or medical diagnostics.
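The 518 gigaflop figure is a theoretical peak, and one way to reproduce it is processors × clock × floating point operations per clock. The sketch assumes a 1.35 GHz shader clock and 3 flops per processor per clock (a multiply-add counted as two, plus one extra multiply); those two values come from outside the announcement and are assumptions.

```python
# Theoretical peak = processors x clock (Hz) x flops per processor per clock.
# ASSUMPTIONS (not in the announcement): 1.35 GHz shader clock and
# 3 flops/clock per processor (multiply-add = 2, plus one extra multiply = 1).
PROCESSORS = 128
CLOCK_HZ = 1.35e9
FLOPS_PER_CLOCK = 3

def peak_gflops(processors: int, clock_hz: float, flops_per_clock: int) -> float:
    """Return theoretical peak throughput in GFLOPS."""
    return processors * clock_hz * flops_per_clock / 1e9

peak = peak_gflops(PROCESSORS, CLOCK_HZ, FLOPS_PER_CLOCK)
print(f"peak ~ {peak:.1f} GFLOPS")
```

Sustained performance on real applications is usually well below a peak figure like this.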

Nvidia will also offer Tesla in a workstation, which it calls a Deskside Supercomputer. It includes two Tesla GPUs, attaches to a PC or workstation via a PCI-Express connection, and delivers about 1 teraflop per unit; multiple units combined deliver up to 8 teraflops of processing power. A teraflop is the processing of a trillion floating point operations per second.

A Tesla Computing Server puts eight Tesla GPUs, with about 1,000 parallel processors in total, into a 1U rack-mount server.
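Multiplying out the per-GPU figures gives the server totals. This sketch assumes each server GPU matches the single Tesla GPU's 128 processors and 518 gigaflop peak stated earlier, and that the totals scale linearly.

```python
# Aggregate figures for an 8-GPU Tesla server, scaling the single-GPU
# numbers from the article linearly (an assumption: no shared-resource losses).
GPUS = 8
PROCESSORS_PER_GPU = 128
PEAK_GFLOPS_PER_GPU = 518

total_processors = GPUS * PROCESSORS_PER_GPU
total_teraflops = GPUS * PEAK_GFLOPS_PER_GPU / 1_000

print(total_processors)             # 1024
print(f"{total_teraflops:.3f} TF")  # 4.144 TF
```

The result, 1,024 processors and about 4 teraflops, matches the "about 1,000 processors" and 4 teraflop figures quoted for the 8-GPU configuration.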

Tesla GPU computing processor, deskside supercomputer, and GPU Computing server

As recently as 2005, the general price point was roughly $1,000 per gigaflop in common supercomputer configurations. In 2004, the DOE spent $25 million for a Cray system rated at 50 teraflops.
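Putting the 2004 Cray purchase and the Tesla deskside price on the same dollars-per-gigaflop scale shows the size of the gap. Keep in mind that not all FLOPS are equal: the Cray rating is presumably double precision, while the Tesla figure is a single precision peak, so the ratio overstates the like-for-like difference.

```python
# Compare dollars per gigaflop: 2004 DOE Cray purchase vs. Tesla D870.
# Figures from the article: $25 million for 50 teraflops; $7,500 for ~1 teraflop.
def usd_per_gflops(price_usd: float, teraflops: float) -> float:
    """Convert a price and a teraflop rating to dollars per gigaflop."""
    return price_usd / (teraflops * 1_000)

cray = usd_per_gflops(25_000_000, 50)   # 500.0
tesla = usd_per_gflops(7_500, 1)        # 7.5
print(f"Cray: ${cray:.0f}/GFLOPS, Tesla: ${tesla:.2f}/GFLOPS, ratio {cray / tesla:.0f}x")
```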

Wikipedia's tracking of the cost of computing:

2000, April: $1,000 per GFLOPS, Bunyip, Australian National University. First sub-US$1/MFLOPS. Gordon Bell Prize 2000.

2000, May: $640 per GFLOPS, KLAT2, University of Kentucky

2003, August: $82 per GFLOPS, KASY0, University of Kentucky

2005: about $2.60 per GFLOPS ($300 / 115 GFLOPS, CPU only) in the Xbox 360, if Linux is implemented as intended

2006, February: about $1 per GFLOPS in ATI PC add-in graphics card (X1900 architecture) – these figures are disputed as they refer to highly parallelized GPU power

2007, March: about $0.42 per GFLOPS in Ambric AM2045
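To see how fast the price fell, the sketch below annualizes the drop between the first and last entries in the list. Treat it as rough: the entries mix full systems, a game console, and chips, and they count different kinds of FLOPS. The roughly 7-year span is rounded from April 2000 to March 2007.

```python
import math

# Entries from the list above: (year, US dollars per GFLOPS).
start_year, start_cost = 2000, 1_000.0   # Bunyip, April 2000
end_year, end_cost = 2007, 0.42          # Ambric AM2045, March 2007

total_drop = start_cost / end_cost
years = end_year - start_year
annual_factor = total_drop ** (1 / years)
halving_months = 12 * math.log(2) / math.log(annual_factor)

print(f"{total_drop:.0f}x cheaper over {years} years")
print(f"~{annual_factor:.2f}x cheaper per year; cost halves every ~{halving_months:.1f} months")
```

For comparison, a Moore's law doubling every 18 to 24 months (a common statement of the law, not a figure from this list) works out to only about 1.4x to 1.6x per year.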

UPDATE: This is amazing. But not all FLOPS are equal.

For graphics, and for problems similar to graphics, Moore's law is being shattered.

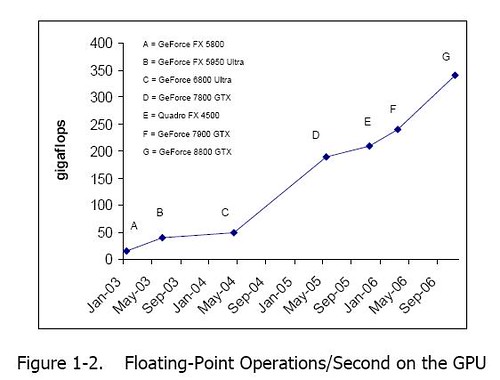

Graphic of Nvidia kicking Moore's Law butt: 330 GFLOPS for the 8800 and 1 teraflop for the G92, expected at Christmas 2007.
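The chart's two data points can be turned into a rough growth rate. This sketch assumes about one year between the GeForce 8800 and the G92 (the 8800's late-2006 launch date is not in the article) and compares against Moore's law stated as a doubling every 24 months, also an assumption.

```python
# Growth implied by the chart: 330 GFLOPS (GeForce 8800) to 1,000 GFLOPS (G92).
# ASSUMPTION: roughly 1 year between the two parts.
gf_8800 = 330.0
gf_g92 = 1_000.0
years_between = 1.0

gpu_growth_per_year = (gf_g92 / gf_8800) ** (1 / years_between)

# ASSUMPTION: Moore's law stated as a doubling every 24 months.
moore_growth_per_year = 2 ** (12 / 24)

print(f"GPU: {gpu_growth_per_year:.2f}x/year vs Moore's law: {moore_growth_per_year:.2f}x/year")
```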

Specialized processing systems like Japan's petaflop MDGrape3 machine can be a lot faster for particular problems.

If the problems that you are interested in are accelerated, then things are getting a lot better faster.

If computational chemistry software packages get a big speed boost, that is a big deal for molecular nanotechnology.

FURTHER READING

My follow up article with more specs and more links

TG Daily has some more details on this new Nvidia GPU supercomputer

Nvidia is supplying a C-based programming model (CUDA), and AMD has an assembly-language-based system (CTM). The programming models will let developers get pretty good results right away, but fine-tuning by experts will speed things up by 5X or more.
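In CUDA's C-based model, each thread works out which data element it owns from its block and thread indices (the built-in `blockIdx.x`, `blockDim.x`, and `threadIdx.x` variables), and the kernel is launched with the `<<<blocks, threads>>>` syntax. The sketch below only emulates that indexing idiom in plain Python for SAXPY (y = a·x + y); it runs the "threads" one after another and says nothing about GPU performance. The function names and the block size of 4 are hypothetical choices for illustration.

```python
def saxpy_kernel(block_idx: int, block_dim: int, thread_idx: int,
                 n: int, a: float, x: list[float], y: list[float]) -> None:
    """Body of one emulated 'thread', mirroring a CUDA kernel's index math."""
    i = block_idx * block_dim + thread_idx   # global element index
    if i < n:                                # guard: the last block may be partial
        y[i] = a * x[i] + y[i]

def launch(n: int, a: float, x: list[float], y: list[float], block_dim: int = 4) -> None:
    """Emulate a 1-D launch of ceil(n / block_dim) blocks, one call per thread."""
    grid_dim = (n + block_dim - 1) // block_dim
    for block_idx in range(grid_dim):
        for thread_idx in range(block_dim):
            saxpy_kernel(block_idx, block_dim, thread_idx, n, a, x, y)

x = [float(i) for i in range(10)]
y = [1.0] * 10
launch(len(x), 2.0, x, y)
print(y)
```

With 10 elements and blocks of 4, three blocks (12 threads) are launched and the bounds check skips the two extra threads. On a real GPU the threads run in parallel, which is where the speedups come from.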

These machines are inferior to regular supercomputers in memory bandwidth and some other factors. However, one could buy these systems and try to beef up the memory with enterprise versions of solid state flash hard drives, at a fairly affordable price, to lessen the weaknesses in this area.

The developer documentation and samples that Nvidia provides. CUDA is available on the new GeForce 8800 graphics card and future Quadro Professional Graphics solutions; NVIDIA claims that computing with CUDA overcomes the limitations of traditional GPU stream computing by enabling GPU processor cores to communicate, synchronize, and share data.

Open source Openvidia library for GPUs

Wikipedia on the state of General Purpose GPUs

Intel is demonstrating its 80-core chip now and hopes to release it in 2009

The NVIDIA Tesla computing pages

What it would take for zettaflop computing

China is building three petaflop computers by 2010

Japan is building a 10 petaflop machine by 2011

Other petaflop projects including the completed MDGrape3

Flash memory improving faster than Moore’s law will accelerate larger database searches

Other things going faster than Moore’s Law

which includes gene sequencing costs


Brian Wang is a Futurist Thought Leader and a popular Science blogger with 1 million readers per month. His blog Nextbigfuture.com is ranked #1 Science News Blog. It covers many disruptive technology and trends including Space, Robotics, Artificial Intelligence, Medicine, Anti-aging Biotechnology, and Nanotechnology.

Known for identifying cutting edge technologies, he is currently a Co-Founder of a startup and fundraiser for high potential early-stage companies. He is the Head of Research for Allocations for deep technology investments and an Angel Investor at Space Angels.

A frequent speaker at corporations, he has been a TEDx speaker, a Singularity University speaker and guest at numerous interviews for radio and podcasts. He is open to public speaking and advising engagements.
